3 Research-Driven Advanced Prompting Techniques for LLM Efficiency and Speed Optimization


Large Language Models (LLMs) like OpenAI’s GPT and Mistral’s Mixtral play an increasingly important role in the development of AI-powered applications. Their ability to generate human-like output makes them useful assistants for content creation, code debugging, and other time-intensive tasks.

However, one common challenge when working with LLMs is that they can return factually incorrect information, popularly known as hallucinations. The reason is straightforward: LLMs are trained to provide satisfactory answers to prompts, and when they can’t provide one, they make one up. Hallucinations can also be influenced by the type of input and by biases in the data used to train these models.

In this article, we will explore three research-backed advanced prompting techniques that have emerged as promising approaches to reducing the occurrence of hallucinations while improving the efficiency and speed of results produced by LLMs.

To better understand the improvements these advanced techniques bring, it helps to start with the basics of prompt writing. A prompt, in the context of AI (and in this article, LLMs), is a group of characters, words, tokens, or a set of instructions that tells the model what the human user intends.

Prompt engineering refers to the art of creating prompts with the goal of better directing the behavior and resulting output of the LLM in question. By using different techniques to convey human intent better, developers can enhance models’ results in terms of accuracy, relevance, and coherence.

Here are some essential tips you should follow when crafting a prompt:

- Be concise

- Provide structure by specifying the desired output format

- Give references or examples if possible

All these will help the model better understand what you need and increase the chances of getting a satisfactory answer.

Below is a good example that queries an AI model with a prompt using all the tips mentioned above:

Prompt = “You’re an expert AI prompt engineer. Please generate a 2 sentence summary of the latest advancements in prompt generation, focusing on the challenges of hallucinations and the potential of using advanced prompting techniques to address these challenges. The output should be in markdown format.”
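The same tips can also be applied in code when prompts are built programmatically. The snippet below is a minimal sketch, not a definitive implementation: the `build_prompt` function and its parameters are hypothetical names introduced here for illustration, and the task text is a shortened version of the prompt above. It only assembles a string and does not call any model.

```python
from typing import Optional


def build_prompt(role: str, task: str, output_format: str,
                 example: Optional[str] = None) -> str:
    """Assemble a prompt from a role, a concise task, a desired
    output format, and an optional reference example.

    This helper is hypothetical; it only joins strings.
    """
    parts = [
        f"You're {role}.",
        task.strip(),
        f"The output should be in {output_format} format.",
    ]
    if example:
        # Tip 3: give a reference or example when one is available.
        parts.append(f"Here is an example of the style I want: {example.strip()}")
    return " ".join(parts)


prompt = build_prompt(
    role="an expert AI prompt engineer",
    task="Please generate a 2 sentence summary of the latest advancements in prompt generation.",
    output_format="markdown",
)
print(prompt)
```

Keeping the role, task, and format as separate arguments makes it easy to vary one of them while holding the others fixed.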

However, following these essential tips does not always guarantee optimal results, especially when dealing with complex tasks.

Leading researchers from prominent AI institutions like Microsoft and Google have invested a lot of resources in LLM optimization, i.e., actively studying the common reasons for hallucinations and finding effective ways to address them. The following prompting techniques have been found to give the studied LLMs better, more context-aware instructions, increasing the chances of getting relevant results and reducing the likelihood of inaccurate or nonsensical information.

Here are some examples of research-driven advanced prompting techniques:

1. Emotional Persuasion Prompting

A 2023 study by Microsoft researchers found that using emotional language and persuasive prompts, called “EmotionPrompts,” can improve LLM performance by over 10%.

This style adds a personal, emotional element to the given prompt, transforming the request into one that’s highly important with significant consequences for the results. It’s almost like talking to a human; using an emotional angle helps communicate the importance of the task, stimulating deeper focus and commitment. This strategy can be useful for tasks requiring higher problem-solving and creativity skills.

Let’s take a look at a simple example where emotion is used to enhance the prompt:

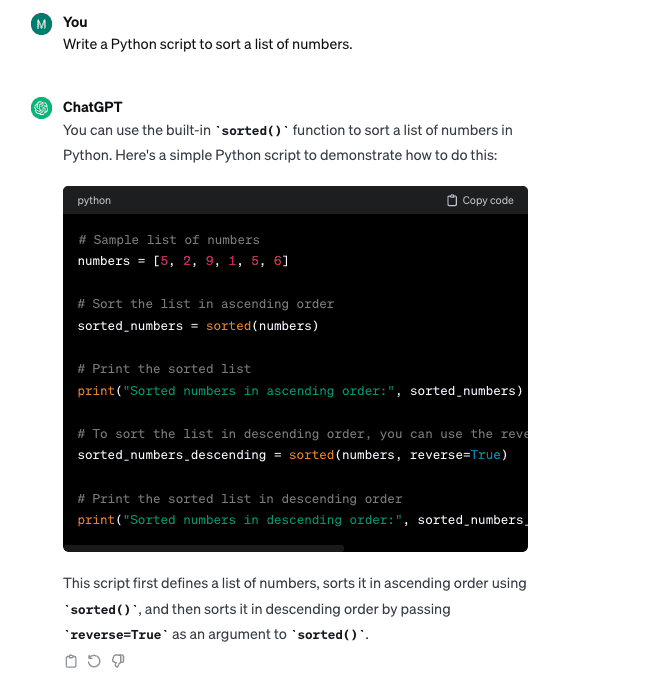

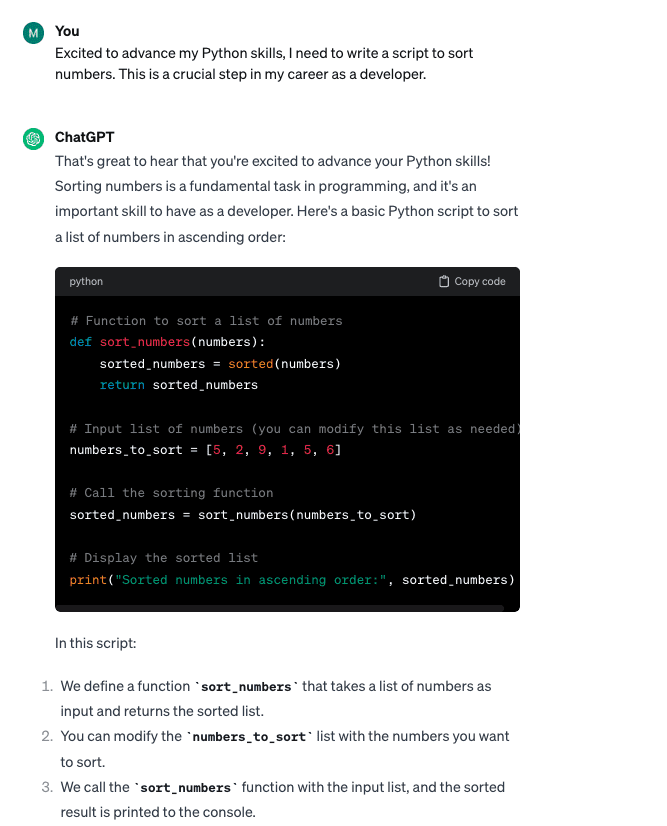

Basic Prompt: “Write a Python script to sort a list of numbers.”

Prompt with Emotional Persuasion: “Excited to advance my Python skills, I need to write a script to sort numbers. This is a crucial step in my career as a developer.”

While both prompt variations produced similar code, the “EmotionPrompts” technique produced cleaner code and included additional explanations as part of the generated result.
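One simple way to experiment with this technique is to append an emotional statement to an existing prompt. The snippet below is a minimal sketch under that assumption: `add_emotional_framing` is a hypothetical helper introduced for illustration, and the appended sentence is taken from the example prompt above. It only builds the prompt string; sending it to a model is left out.

```python
def add_emotional_framing(base_prompt: str, stimulus: str) -> str:
    """Append an emotional statement to a plain prompt.

    Hypothetical helper: it joins the two strings with a single space.
    """
    return f"{base_prompt.rstrip()} {stimulus.strip()}"


basic_prompt = "Write a Python script to sort a list of numbers."
emotional_prompt = add_emotional_framing(
    basic_prompt,
    "This is a crucial step in my career as a developer.",
)
print(emotional_prompt)
```

Keeping the basic prompt and the emotional statement separate makes it straightforward to send both variants to the same model and compare the responses.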

Another interesting experiment by Finxter found that offering an LLM a monetary tip can also improve its performance, almost like appealing to a human’s financial incentive.

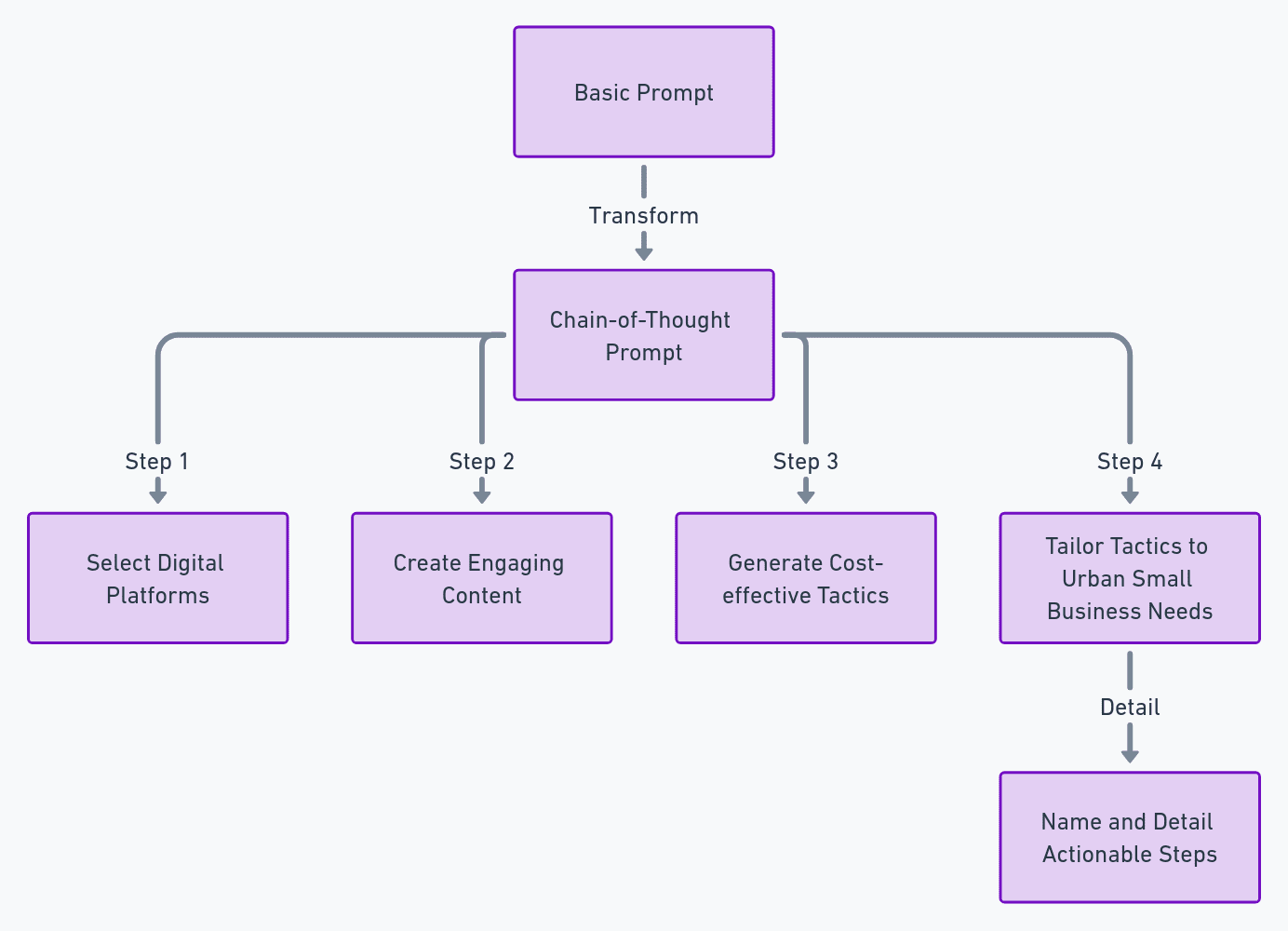

2. Chain-of-Thought Prompting

Another prompting technique whose effectiveness has been studied by a group of University of Pittsburgh researchers is the Chain-of-Thought style. This technique employs a step-by-step approach that walks the model through the desired output structure. This logical approach helps the model craft a more relevant and structured response to a complex task or question.

Here’s an example of how to create a Chain-of-Thought style prompt based on the given template (using OpenAI’s ChatGPT with GPT-4):

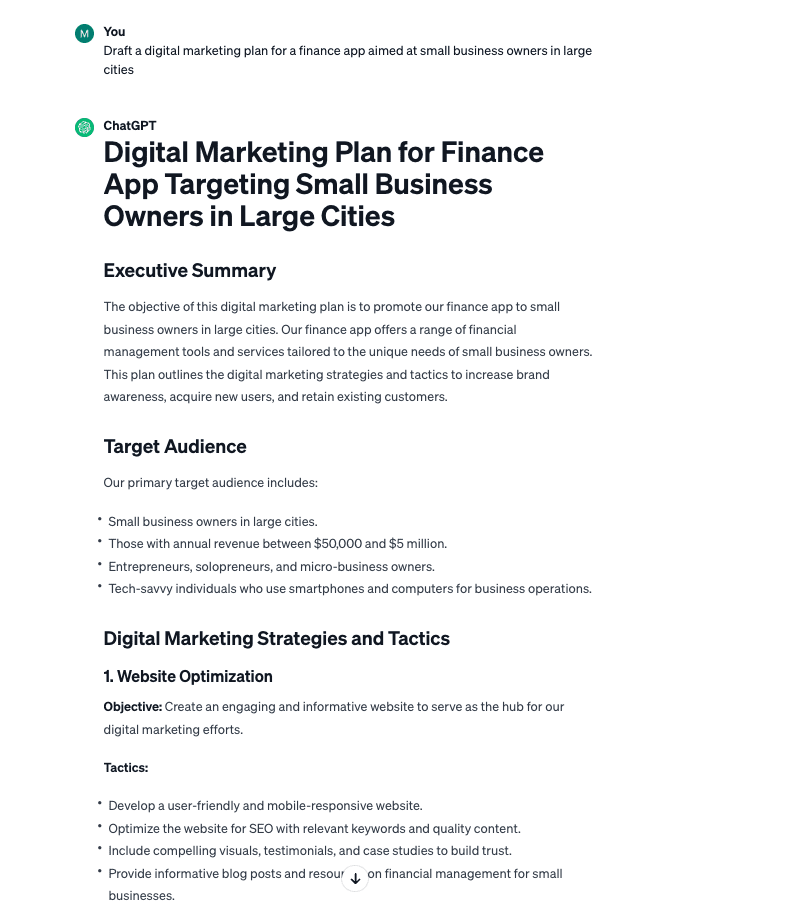

Basic Prompt: “Draft a digital marketing plan for a finance app aimed at small business owners in large cities.”

Chain-of-Thought Prompt:

“Outline a digital marketing strategy for a finance app for small business owners in large cities. Focus on:

- Selecting digital platforms that are popular among this business demographic.

- Creating engaging content like webinars or other relevant tools.

- Generating cost-effective tactics unique from traditional ads.

- Tailoring these tactics to urban small business needs in a way that increases customer conversion rates.

Name and detail each part of the plan with unique, actionable steps.”

At a cursory look, the Chain-of-Thought prompt generated a more precise and actionable result.
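A prompt in this style can be assembled from a goal, a list of steps, and a closing instruction. The snippet below is a minimal sketch of that idea: `build_stepwise_prompt` and its parameters are hypothetical names introduced for illustration, and the text is taken from the example prompt above. It only builds the prompt string.

```python
from typing import List


def build_stepwise_prompt(goal: str, focus_points: List[str], closing: str) -> str:
    """Lay out a goal, one bullet per step, and a closing instruction.

    Hypothetical helper: the layout mirrors the example prompt in this
    article and is not a required format.
    """
    lines = [f"{goal} Focus on:"]
    lines += [f"- {point}" for point in focus_points]
    lines.append(closing)
    return "\n".join(lines)


cot_prompt = build_stepwise_prompt(
    goal=("Outline a digital marketing strategy for a finance app "
          "for small business owners in large cities."),
    focus_points=[
        "Selecting digital platforms that are popular among this business demographic.",
        "Creating engaging content like webinars or other relevant tools.",
        "Generating cost-effective tactics unique from traditional ads.",
        "Tailoring these tactics to urban small business needs in a way "
        "that increases customer conversion rates.",
    ],
    closing="Name and detail each part of the plan with unique, actionable steps.",
)
print(cot_prompt)
```

Because the steps live in a list, they can be reordered, added, or removed without rewriting the rest of the prompt.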

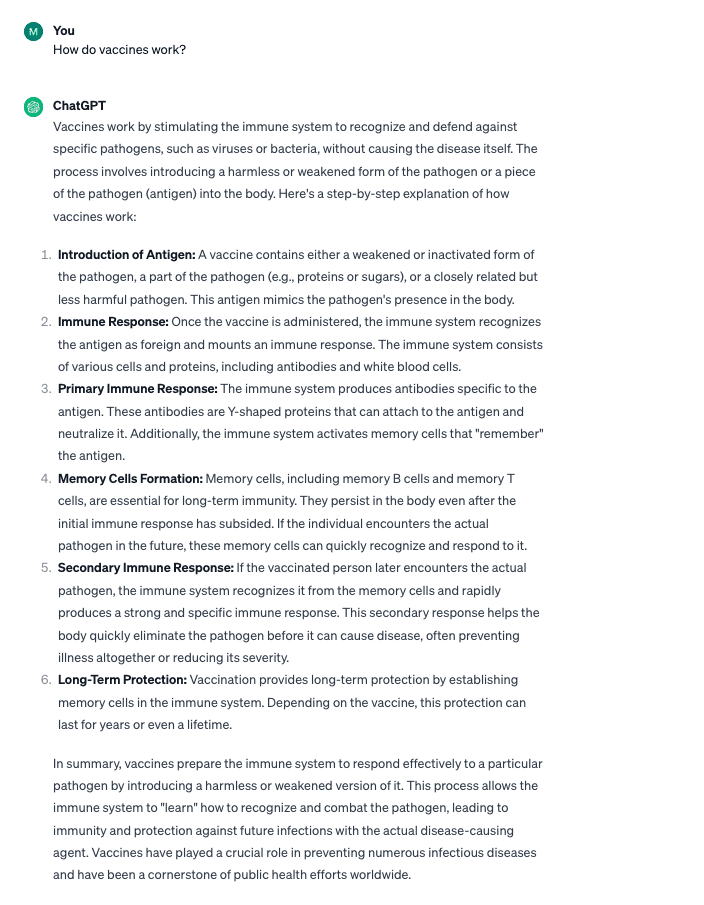

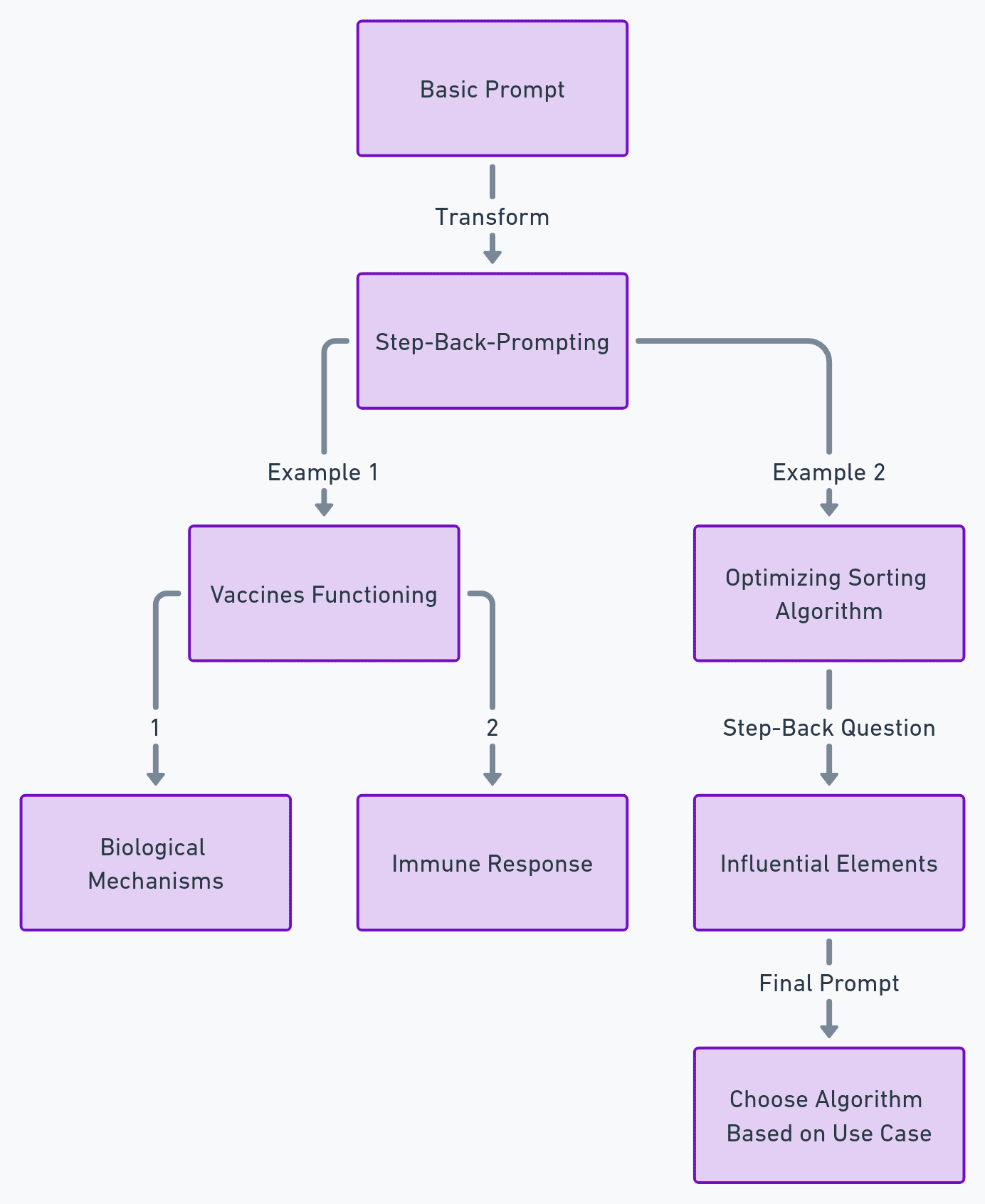

3. Step-Back Prompting

The Step-Back Prompting technique, presented by seven Google DeepMind researchers, is designed to evoke reasoning in LLMs. This is similar to teaching a student the underlying principles of a concept before solving a complex problem.

To apply this technique, you need to point out the underlying principle behind a question before requesting the model to provide an answer. This ensures the model gets a robust context, which will help it give a technically correct and relevant answer.

Let’s examine two examples (using OpenAI’s ChatGPT with GPT-4):

Example 1:

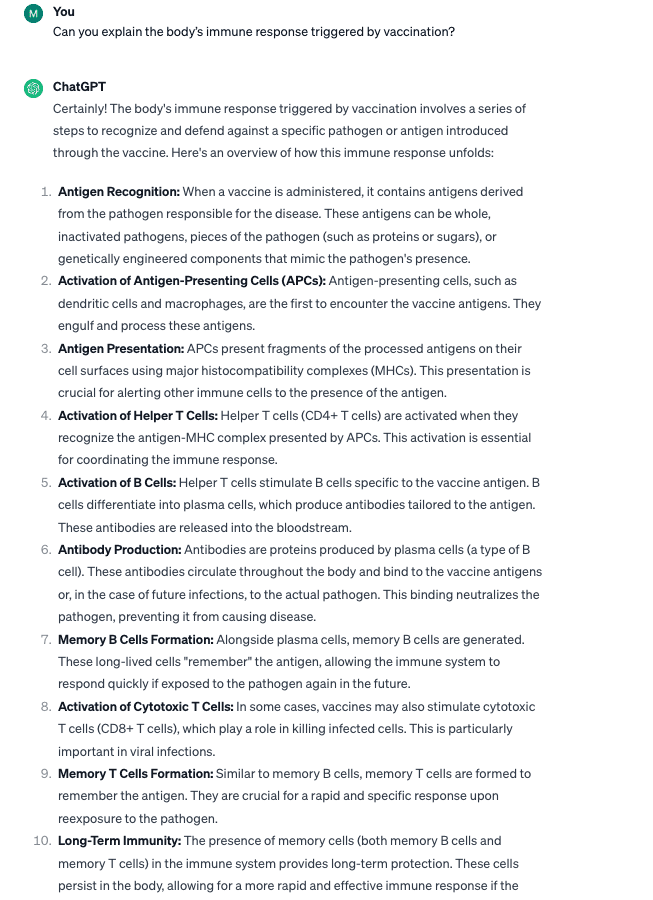

Basic Prompt: “How do vaccines work?”

Prompts using the Step-Back Technique:

- “What biological mechanisms allow vaccines to protect against diseases?”

- “Can you explain the body’s immune response triggered by vaccination?”

While the basic prompt provided a satisfactory answer, the Step-Back prompts produced a more in-depth, technical answer. This makes the technique especially useful for technical questions.
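The technique can also be run as two model calls: first ask about the underlying principle, then pass that answer back as context for the original question. The snippet below is a minimal sketch under that assumption. `step_back_answer` is a hypothetical helper, and `echo_model` is a stand-in that only echoes its input so the example runs without any API; in practice you would replace it with a function that calls your model of choice.

```python
from typing import Callable


def step_back_answer(question: str, principle_question: str,
                     ask: Callable[[str], str]) -> str:
    """Ask the step-back question first, then answer the original
    question using that reply as context.

    `ask` is any function that takes a prompt and returns the model's text.
    """
    principles = ask(principle_question)
    final_prompt = (
        f"Background principles:\n{principles}\n\n"
        f"Using the principles above, answer this question: {question}"
    )
    return ask(final_prompt)


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call; it only echoes the prompt it receives."""
    return f"[model reply to: {prompt}]"


result = step_back_answer(
    question="How do vaccines work?",
    principle_question="What biological mechanisms allow vaccines to protect against diseases?",
    ask=echo_model,
)
print(result)
```

Passing the model call in as `ask` keeps the two-step flow independent of any particular provider or client library.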

As developers continue to build novel applications on top of existing AI models, the need for advanced prompting techniques keeps growing. Such techniques help Large Language Models understand not just our words but the intent and emotion behind them, so they can generate more accurate and contextually relevant outputs.