4 Ways of Converting Unstructured Data into Structured Insights with LLMs

Image by Author

In today’s world, we’re constantly generating information, yet much of it arises in unstructured formats.

This includes the vast array of content on social media, as well as countless PDFs and Word documents stored across organizational networks.

Getting insights and value from these unstructured sources, whether they be text documents, web pages, or social media updates, poses a considerable challenge.

However, the emergence of Large Language Models (LLMs) such as GPT or LLaMA has transformed the way we deal with unstructured data.

These models are powerful tools for transforming unstructured data into structured, valuable information, surfacing insights that would otherwise stay buried in our digital content.

Let’s see 4 different ways to extract insights from unstructured data using GPT 👇🏻

Throughout this tutorial, we will be working with OpenAI's API. If you don't have a working account yet, check out this tutorial on how to get your OpenAI API account.
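The article's own code is only shown as images, so here is a minimal sketch of a helper for sending one prompt to the API. It assumes the `openai` Python package (v1.x) is installed and that the `OPENAI_API_KEY` environment variable is set; the model name `gpt-3.5-turbo` and the helper names `build_messages` and `make_openai_complete` are illustrative choices, not the author's.

```python
from typing import Callable, Dict, List


def build_messages(prompt: str) -> List[Dict[str, str]]:
    """Wrap a single prompt as one user message in the chat-completions format."""
    return [{"role": "user", "content": prompt}]


def make_openai_complete(model: str = "gpt-3.5-turbo",
                         temperature: float = 0.0) -> Callable[[str], str]:
    """Return a prompt -> reply function backed by OpenAI's chat completions API.

    Assumes the `openai` package (v1.x) is installed and OPENAI_API_KEY is set.
    The model name is an assumption; use any chat model your account can access.
    """
    from openai import OpenAI  # imported lazily so this file loads without the package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def complete(prompt: str) -> str:
        response = client.chat.completions.create(
            model=model,
            messages=build_messages(prompt),
            temperature=temperature,  # a low temperature makes answers less random
        )
        # content can be None in some responses, so fall back to an empty string
        return (response.choices[0].message.content or "").strip()

    return complete
```

Usage would look like `complete = make_openai_complete()` followed by `complete("Say hello")`. Passing the model call around as a plain `prompt -> text` function keeps the prompt logic separate from the API client, so it can be swapped for a stub when testing offline.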

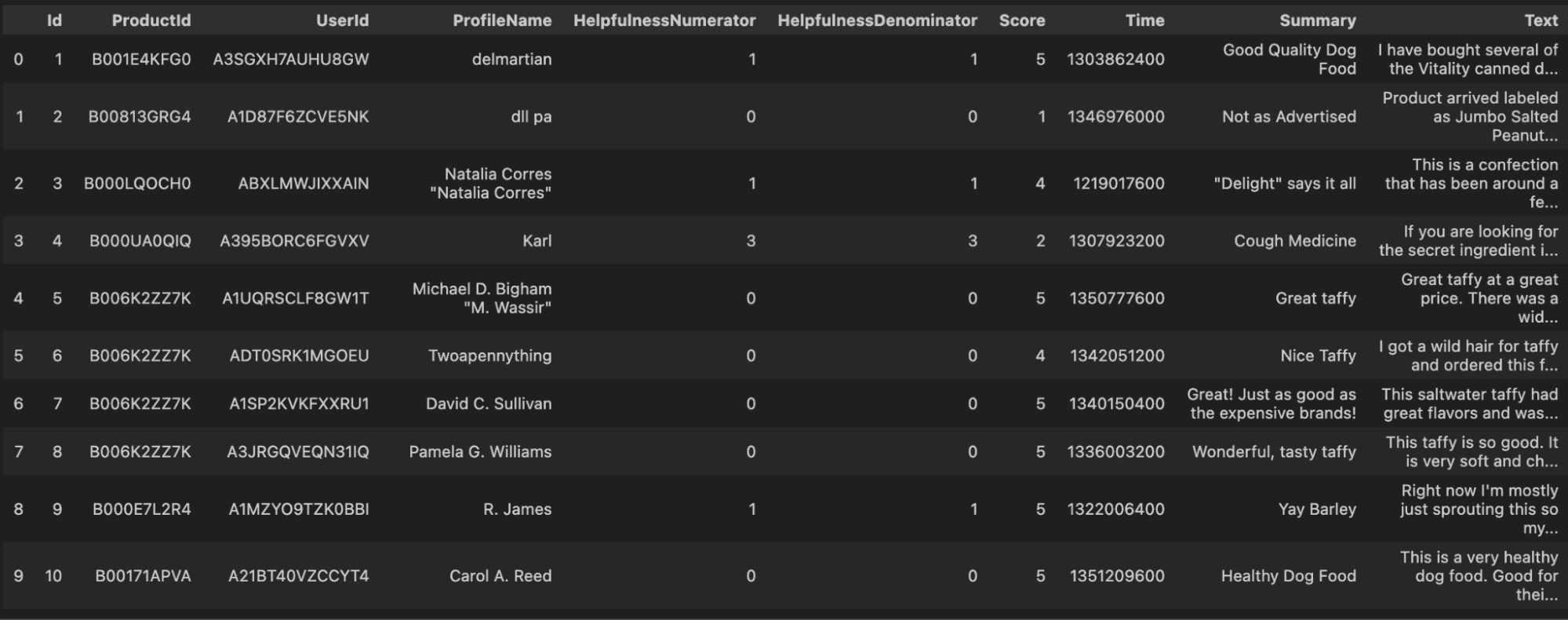

Imagine we are running an e-commerce business (Amazon in this case 😉) and are responsible for dealing with the millions of reviews that users leave on our products.

To demonstrate the opportunity LLMs represent for dealing with this type of data, I am using a Kaggle dataset of Amazon reviews.

Original dataset

Structured data refers to data types that are consistently formatted and repeated. Classic examples include banking transactions, airline reservations, retail sales, and telephone call records.

This data typically arises from transactional processes.

Such data is well-suited to storage and management within a conventional database management system due to its uniform format.

On the other hand, text is often categorized as unstructured data. Historically, before the development of textual disambiguation techniques, incorporating text into a standard database management system was challenging due to its less rigid structure.

And this brings us to the following question…

Is text genuinely unstructured, or does it possess an underlying structure that’s not immediately apparent?

Text does possess a structure, but it is not the conventional structured format that computers readily recognize. Computers can interpret simple, straightforward structures, but language, with its elaborate syntax, is far harder for them to make sense of.

So this brings us to a final question:

If computers struggle to process unstructured data efficiently, is it possible to convert this unstructured data into a structured format for better handling?

Manual conversion to structured data is time-consuming and carries a high risk of human error. Unstructured text is often a mishmash of words, sentences, and paragraphs in a wide variety of formats, which makes it difficult for machines to grasp its meaning and structure it.

And this is precisely where LLMs play a key role. Converting unstructured data into a structured format is essential if we want to work with or process it in any way, including data analysis, information retrieval, and knowledge management.
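To make "structured format" concrete, here is a minimal sketch of what a structured row per review could look like and how a model reply could be parsed into it. Note that this swaps in a different technique from the article: it asks for all fields in one call as JSON, while the sections below make one request per task. The `ReviewRecord` schema, its field names, and the prompt wording are hypothetical; `complete` is any function that sends a prompt to the model and returns its text reply.

```python
import json
from dataclasses import dataclass
from typing import Callable

ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}

# Hypothetical prompt asking for every field at once as a JSON object.
RECORD_PROMPT = (
    "Read the review below and reply with a JSON object only, using exactly these keys: "
    '"summary" (at most 3 words), "sentiment" (positive, negative or neutral), '
    '"theme" (complaint, experience or question) and "product" (the product discussed).\n'
    'Review: "{review}"'
)


@dataclass
class ReviewRecord:
    """One structured row derived from one free-text review (hypothetical schema)."""
    summary: str
    sentiment: str
    theme: str
    product: str


def extract_record(review: str, complete: Callable[[str], str]) -> ReviewRecord:
    """Send the review to the model and parse its JSON reply into a ReviewRecord."""
    reply = complete(RECORD_PROMPT.format(review=review))
    data = json.loads(reply)  # raises json.JSONDecodeError if the reply is not valid JSON
    record = ReviewRecord(
        summary=str(data["summary"]).strip(),  # KeyError if the model dropped a field
        sentiment=str(data["sentiment"]).strip().lower(),
        theme=str(data["theme"]).strip().lower(),
        product=str(data["product"]).strip(),
    )
    # Reject labels outside the expected set instead of silently storing them.
    if record.sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment label: {record.sentiment!r}")
    return record
```

Validating the reply matters because the model can ignore the requested format; failing loudly on a bad row is usually safer than writing unchecked text into a table.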

Large Language Models (LLMs) like GPT-3 or GPT-4 offer powerful capabilities for extracting insights from unstructured data.

So our main weapons will be the OpenAI API and the prompts we write to define what we need. Here are four ways you can use these models to get structured insights from unstructured data:

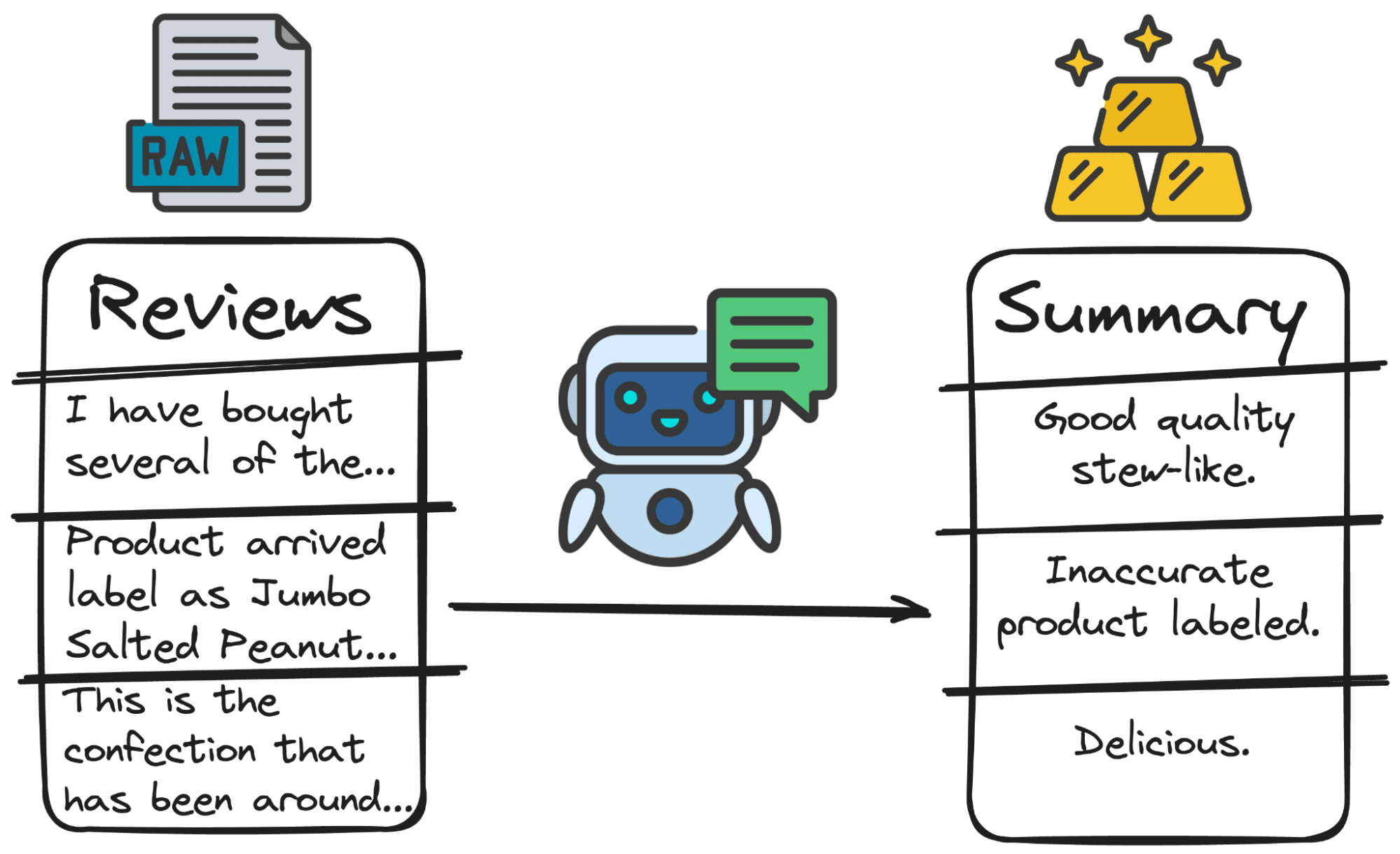

1. Text Summarization

LLMs can efficiently summarize large volumes of text, such as reports, articles, or lengthy documents. This can be particularly useful for quickly understanding key points and themes in extensive data sets.

In our case, it is much better to start with a short summary of each review than to read the whole review, and GPT can produce one in seconds.

Our only task, and the most important one, will be crafting a good prompt.

In this case, I can tell GPT to:

Summarize the following review: "{review}" with a 3 words sentence.

So let’s put this into practice with a few lines of code.

Code by Author

And we will get something like the following…

Image by Author
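Since the author's code is shown only as an image, here is a minimal sketch of the same idea using the prompt quoted above. The function names `summarize_review` and `summarize_all` are hypothetical, and `complete` is assumed to be any function that sends a prompt to GPT and returns the reply text.

```python
from typing import Callable, Iterable, List

# The prompt quoted in the article; {review} is filled in for each review.
SUMMARY_PROMPT = 'Summarize the following review: "{review}" with a 3 words sentence.'


def summarize_review(review: str, complete: Callable[[str], str]) -> str:
    """Ask the model for a three-word summary of a single review."""
    reply = complete(SUMMARY_PROMPT.format(review=review))
    # Models sometimes wrap the answer in quotes, so strip whitespace and quotes.
    return reply.strip().strip('"')


def summarize_all(reviews: Iterable[str], complete: Callable[[str], str]) -> List[str]:
    """Summarize each review in turn (one API call per review)."""
    return [summarize_review(review, complete) for review in reviews]
```

The prompt only asks for three words, so the model may still return more; if the length matters downstream, it is worth checking `len(summary.split())` before storing the result.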

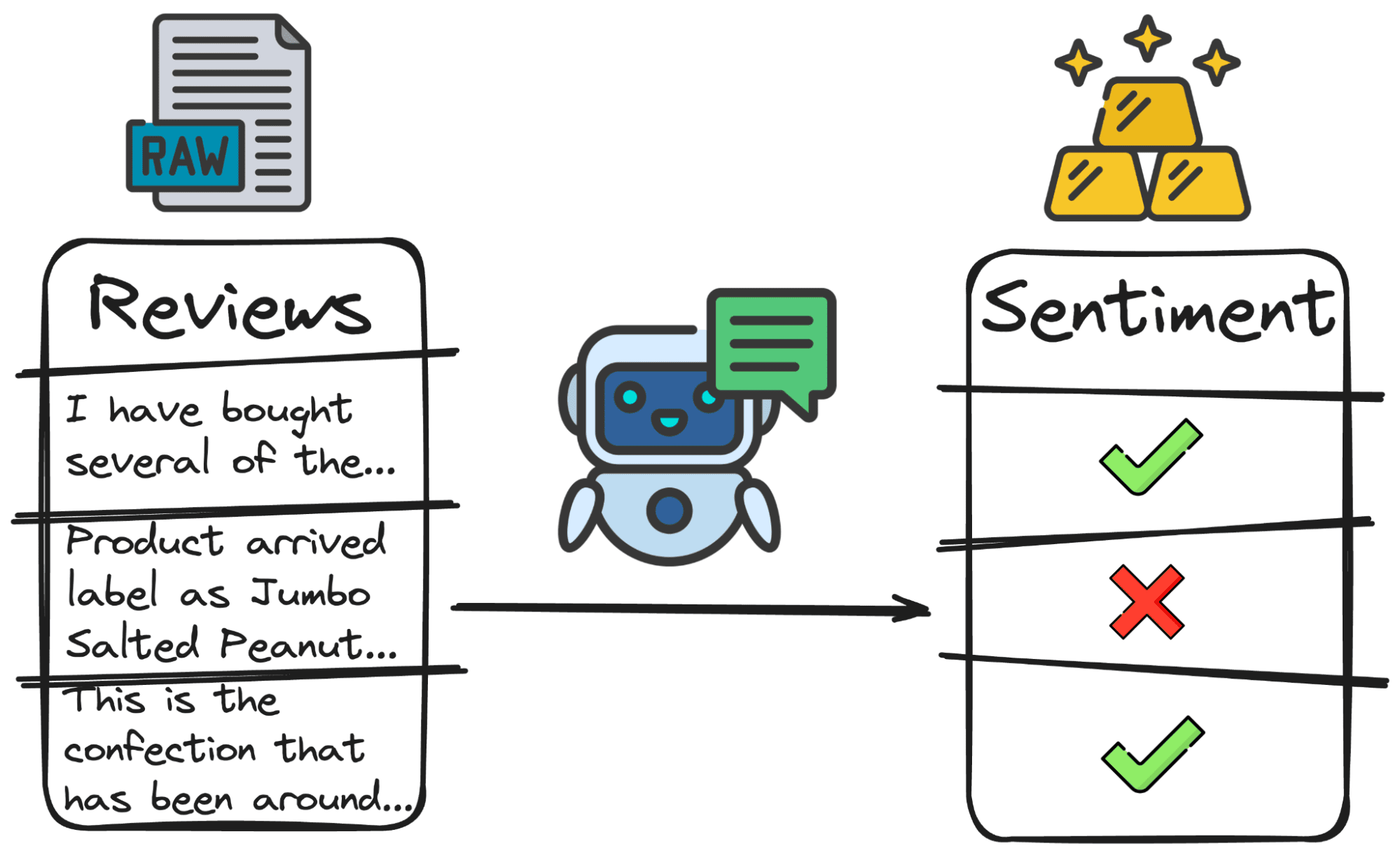

2. Sentiment Analysis

These models can be used for sentiment analysis, determining the tone and sentiment of text data such as customer reviews, social media posts, or feedback surveys.

The simplest, yet most widely used, classification is polarity:

- Positive reviews, or why people are happy with the product.

- Negative reviews, or why they are upset.

- Neutral reviews, or why people are indifferent to the product.

By analyzing these sentiments, businesses can gauge public opinion, customer satisfaction, and market trends. So, instead of having a person label each review, we can have our friend GPT classify them for us.

So, again, the main code will consist of a prompt and a simple call to the API.

Let’s put this into practice.

Code by Author

And we would obtain something like the following:

Image by Author
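Here is a minimal sketch of polarity classification plus a simple tally across reviews, which is the kind of aggregate that lets a business gauge overall satisfaction. The prompt wording and function names are hypothetical (the author's exact prompt is only in the image), and `complete` is assumed to be any prompt-to-reply function.

```python
from collections import Counter
from typing import Callable, Iterable

LABELS = ("positive", "negative", "neutral")

# Hypothetical prompt; it asks for a single-word label to keep parsing simple.
SENTIMENT_PROMPT = (
    "Classify the sentiment of the following review as positive, negative or neutral. "
    'Answer with one word only.\nReview: "{review}"'
)


def classify_sentiment(review: str, complete: Callable[[str], str]) -> str:
    """Return 'positive', 'negative', 'neutral', or 'unknown' for unexpected replies."""
    answer = complete(SENTIMENT_PROMPT.format(review=review)).strip().lower().rstrip(".")
    # Anything outside the three expected labels is flagged rather than trusted.
    return answer if answer in LABELS else "unknown"


def sentiment_breakdown(reviews: Iterable[str], complete: Callable[[str], str]) -> Counter:
    """Count how many reviews fall into each sentiment label."""
    return Counter(classify_sentiment(review, complete) for review in reviews)
```

Keeping an explicit `"unknown"` bucket makes it easy to spot how often the model strays from the requested labels.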

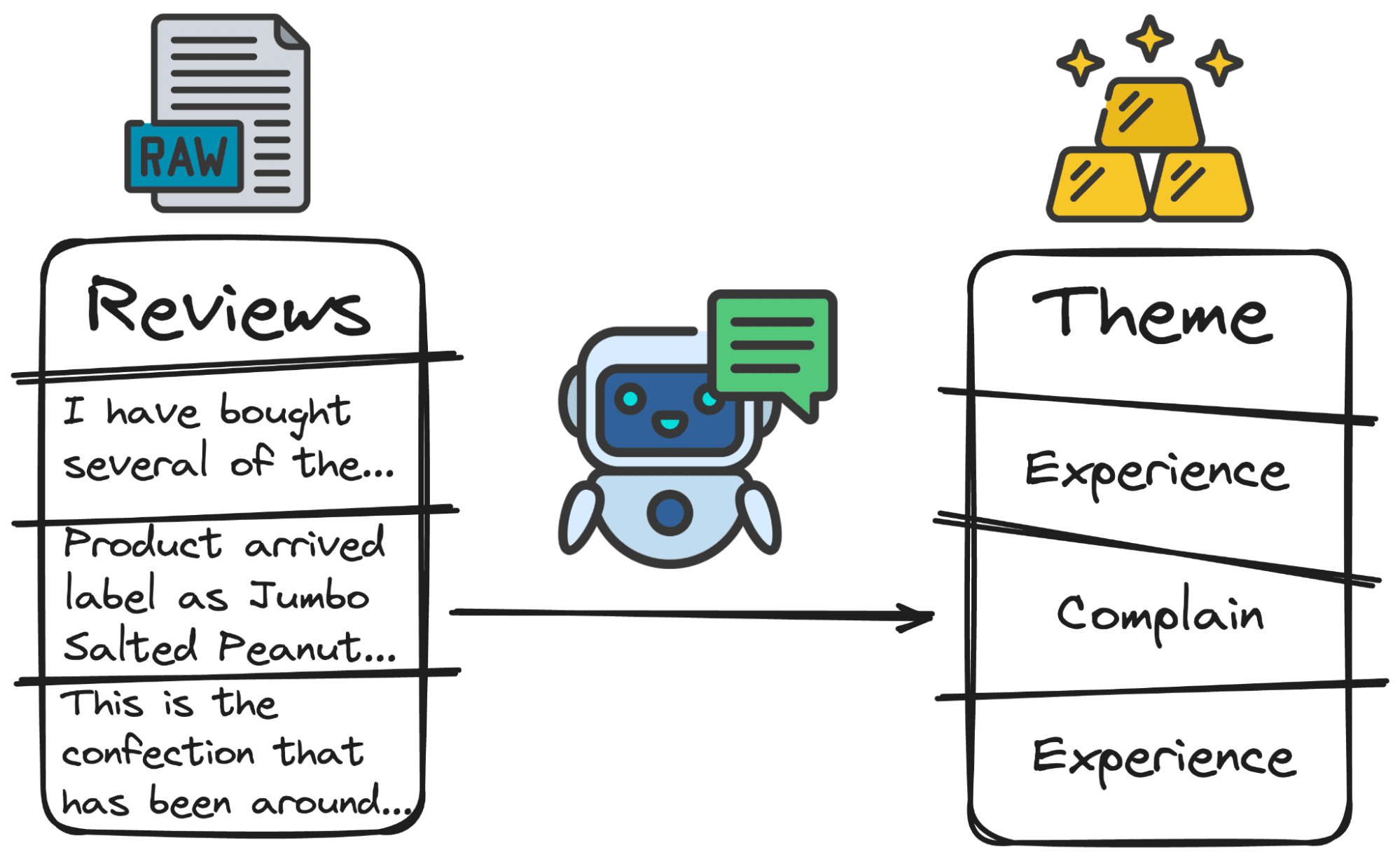

3. Thematic Analysis

LLMs can identify and categorize themes or topics within large datasets. This is particularly useful for qualitative data analysis, where you might need to sift through vast amounts of text to understand common themes, trends, or patterns.

When analyzing reviews, it can be useful to understand the main purpose of each review. Some users will be complaining about something (service, quality, cost…), some will be rating their experience with the product (either good or bad), and others will be asking questions.

Again, doing this work manually would take many hours. But with our friend GPT, it only takes a few lines of code:

Code by Author

Image by Author
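As a hedged illustration of the approach (not the author's code), here is a sketch that asks GPT for the review's main purpose using the three categories described above, then groups reviews by the answer. The prompt text, the category names `complaint`, `experience`, and `question`, and the function names are assumptions; `complete` is any prompt-to-reply function.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List

THEMES = {"complaint", "experience", "question"}

# Hypothetical prompt built from the three review purposes described above.
THEME_PROMPT = (
    "What is the main purpose of the following review? Answer with exactly one word: "
    "complaint (about service, quality, cost...), experience (rating their experience "
    'with the product) or question (asking a question).\nReview: "{review}"'
)


def classify_theme(review: str, complete: Callable[[str], str]) -> str:
    """Return 'complaint', 'experience', 'question', or 'other' for unexpected replies."""
    words = complete(THEME_PROMPT.format(review=review)).lower().split()
    # Keep only the first word and drop surrounding punctuation, e.g. "Complaint." -> "complaint".
    label = words[0].strip(".,:;!\"'") if words else ""
    return label if label in THEMES else "other"


def group_by_theme(reviews: Iterable[str], complete: Callable[[str], str]) -> Dict[str, List[str]]:
    """Bucket reviews by their detected purpose."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for review in reviews:
        groups[classify_theme(review, complete)].append(review)
    return dict(groups)
```

Grouping the raw reviews under each theme lets you, for example, route all `question` reviews to a support team in one batch.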

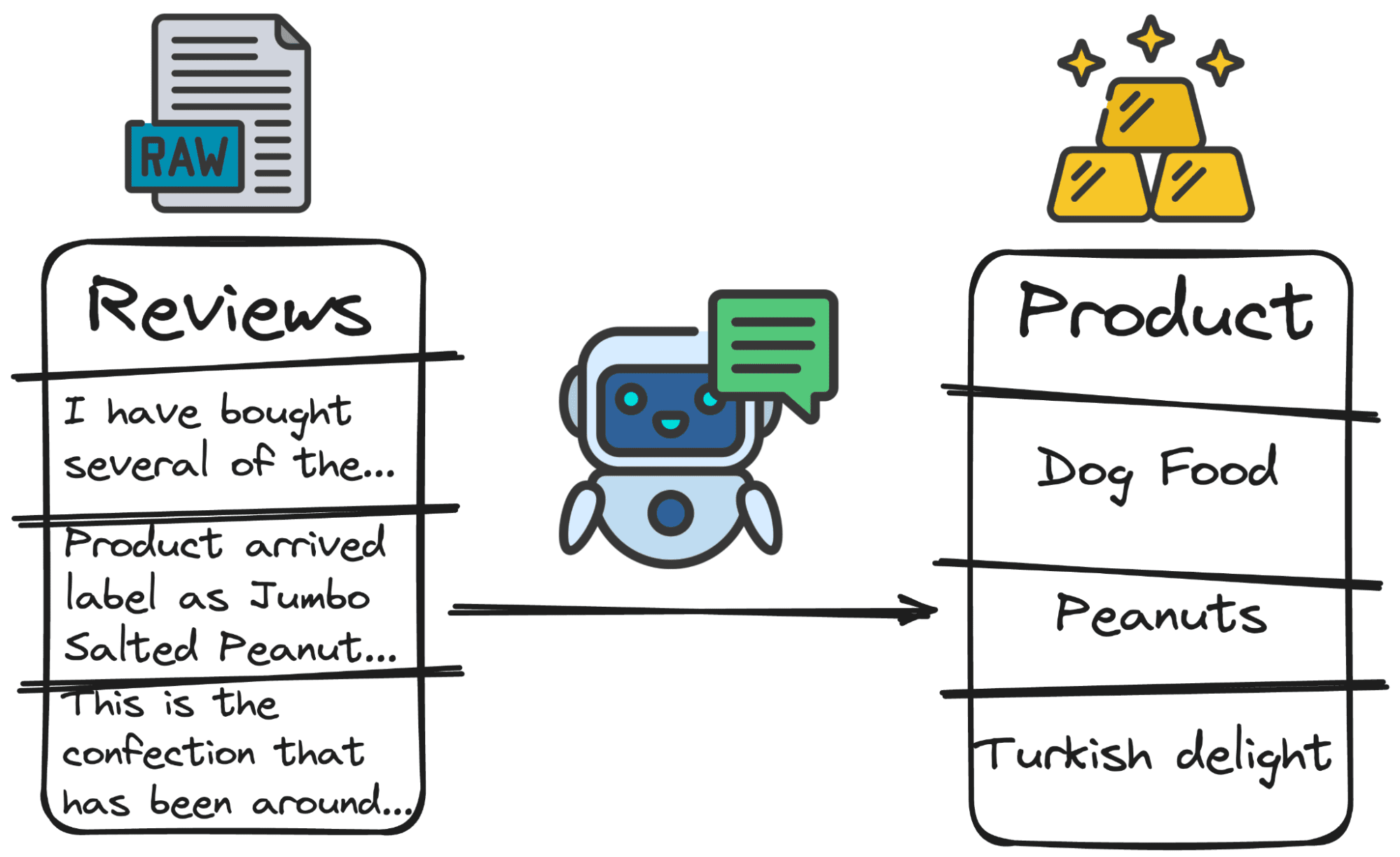

4. Keyword Extraction

LLMs can be used to extract keywords, that is, to detect any element we ask for.

Imagine, for instance, that we want to check whether the product a review is attached to is actually the product the user is talking about. To do so, we need to detect which product the user is reviewing.

And again… we can ask our GPT model to find out the main product the user is talking about.

So let’s put this into practice!

Code by Author

Image by Author
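Here is a minimal sketch of that check, assuming a hypothetical prompt and helper names, and any `complete` function that sends a prompt to GPT and returns the reply. The comparison with the listing's product name is a deliberately crude substring match in either direction; real product names vary enough that a fuzzier comparison would likely be needed.

```python
from typing import Callable, Optional

# Hypothetical prompt; the article's exact wording is only shown as an image.
PRODUCT_PROMPT = (
    "Which product is the user talking about in the following review? "
    'Answer with the product name only, or "none" if no product is mentioned.\n'
    'Review: "{review}"'
)


def extract_product(review: str, complete: Callable[[str], str]) -> Optional[str]:
    """Return the product the model detects in the review, or None."""
    answer = complete(PRODUCT_PROMPT.format(review=review)).strip().strip('"').strip()
    return None if answer.lower() in {"", "none"} else answer


def matches_listing(review: str, listed_product: str, complete: Callable[[str], str]) -> bool:
    """Crude check: does the detected product name overlap the listing's product name?"""
    detected = extract_product(review, complete)
    if detected is None:
        return False
    detected, listed = detected.lower(), listed_product.lower()
    # Substring match in either direction; a real pipeline would need fuzzier matching.
    return detected in listed or listed in detected
```

Reviews where `matches_listing` returns `False` are candidates for manual inspection, since the user may be reviewing a different product than the one the review is attached to.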

In conclusion, the transformative power of Large Language Models (LLMs) in converting unstructured data into structured insights cannot be overstated. By harnessing these models, we can extract meaningful information from the vast sea of unstructured data that flows within our digital world.

The four methods discussed – text summarization, sentiment analysis, thematic analysis and keyword extraction – demonstrate the versatility and efficiency of LLMs in handling diverse data challenges.

These capabilities enable organizations to gain a deeper understanding of customer feedback, market trends, and operational inefficiencies.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is currently working in the Data Science field applied to human mobility. He is a part-time content creator focused on data science and technology. You can contact him on LinkedIn, Twitter or Medium.