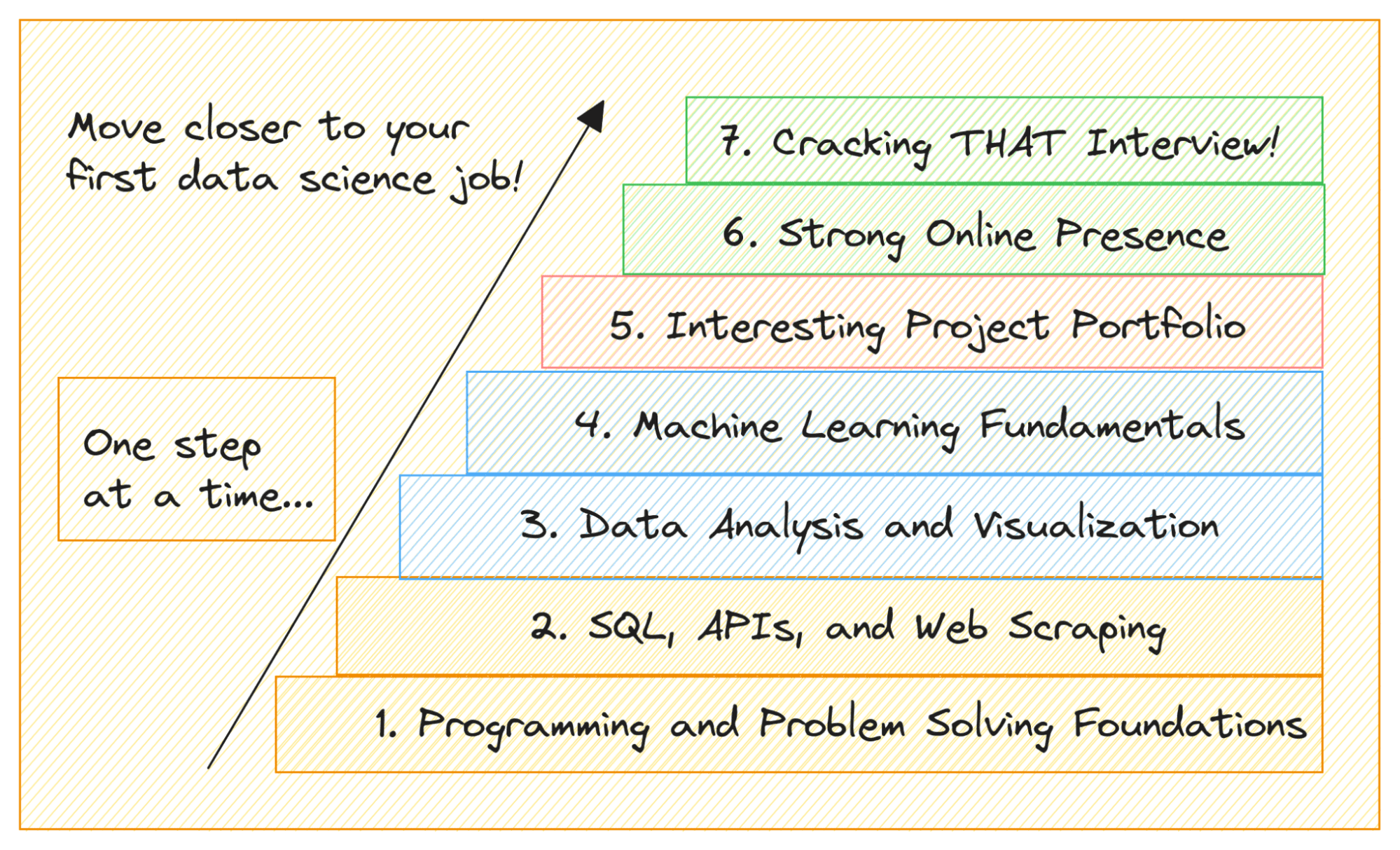

7 Steps to Landing Your First Data Science Job

Image by Author

Are you looking to switch to a data science career? If so, chances are you’ve already signed up for an online course, a bootcamp, or the like. Perhaps you’ve bookmarked a self-study data science roadmap that you’re planning to work through. So how will yet another guide help you?

If you’ve decided to pursue a data science career, you have to work toward it; there’s no other way. Landing a data science job also takes much more than learning data science concepts. And even the learning part can be overwhelming, given the sheer number of concepts, tools, techniques, and libraries you need to pick up.

This article isn’t clickbait, so there are no tall promises to help you land a data science job in X days. Instead, it takes a holistic approach to the data science job search process, which includes:

- Learning data science concepts

- Working on projects to showcase your technical expertise

- Marketing yourself as a professional

- Preparing strategically for interviews

We hope this guide helps you along the way!

To break into data science, you should first develop a solid foundation in programming and problem solving. I suggest learning Python as your first language.

Python’s syntax is easy to follow and there are plenty of great learning resources, so you can get up and running in a few hours. You can then spend a few weeks focusing on the following programming fundamentals in Python:

- Built-in data structures

- Loops

- Functions

- Classes and objects

- Functional programming basics

- Pythonic features: comprehensions and generators
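
To make the fundamentals above concrete, here’s a small sketch that touches each of them in one place. The `Course` class, its fields, and the sample data are hypothetical and chosen only for illustration.

```python
from functools import reduce


class Course:
    """A simple class: each Course object stores a title and a duration in hours."""

    def __init__(self, title, hours):
        self.title = title
        self.hours = hours

    def is_short(self):
        return self.hours < 8


def total_hours(courses):
    """A function that sums hours with an explicit for loop."""
    total = 0
    for course in courses:
        total += course.hours
    return total


def running_totals(courses):
    """A generator that lazily yields the cumulative hours after each course."""
    total = 0
    for course in courses:
        total += course.hours
        yield total


# Built-in data structures: a list of objects and a dict built from it
courses = [Course("Python basics", 10), Course("SQL", 6), Course("pandas", 8)]
hours_by_title = {c.title: c.hours for c in courses}  # dict comprehension

# List comprehension with a condition
short_titles = [c.title for c in courses if c.is_short()]

# Functional programming basics: map, filter, and reduce
upper_titles = list(map(str.upper, hours_by_title))  # iterating a dict yields its keys
long_courses = list(filter(lambda c: c.hours >= 8, courses))
hours_via_reduce = reduce(lambda acc, c: acc + c.hours, courses, 0)

print(total_hours(courses))           # 24
print(short_titles)                   # ['SQL']
print(list(running_totals(courses)))  # [10, 16, 24]
print(hours_via_reduce)               # 24
```

The generator computes each running total only when it’s asked for one, which is why it’s wrapped in `list()` before printing.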

If you want a quickstart guide to Python, work through the Python lecture in Harvard’s CS50 course. For more immersive learning, check out Introduction to Programming with Python, another free course from Harvard.

For practice, work through the projects in the Python course above and solve a few problems on HackerRank.

At this stage, you should also be comfortable working at the command line, and it helps to learn how to create and work with virtual environments in Python.
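
Virtual environments are usually created from the command line with `python -m venv .venv`. The same functionality is available programmatically through the standard library’s `venv` module. This sketch creates a throwaway environment in a temporary directory (the name `demo-env` is arbitrary) and checks what it produced. It skips installing pip so that it runs quickly and offline.

```python
import os
import tempfile
import venv
from pathlib import Path

# Create a throwaway virtual environment in a temporary directory.
# with_pip=False skips installing pip, so this runs quickly and offline;
# symlinks mirrors the command-line default (symlink on POSIX, copy on Windows).
env_dir = Path(tempfile.mkdtemp()) / "demo-env"
venv.create(env_dir, with_pip=False, symlinks=(os.name != "nt"))

# Every virtual environment has a pyvenv.cfg file at its root
cfg_file = env_dir / "pyvenv.cfg"

# The environment's own interpreter lives in Scripts\ on Windows, bin/ elsewhere
if os.name == "nt":
    python_path = env_dir / "Scripts" / "python.exe"
else:
    python_path = env_dir / "bin" / "python"

print(cfg_file.exists())     # True
print(python_path.exists())  # True
```

From a terminal, you’d activate an environment created with `python -m venv .venv` by running `source .venv/bin/activate` on macOS or Linux, or `.venv\Scripts\activate` on Windows.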

Regardless of which data role you’re applying for, gaining proficiency in SQL is essential. You can start with the following topics:

- Basic SQL queries

- Conditional filtering

- Joins

- Subqueries

- SQL string functions
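
You don’t need a database server to start practicing these topics. Python ships with the `sqlite3` module, so the sketch below builds a tiny in-memory database and runs one query per topic. The `customers` and `orders` tables and their rows are made up for illustration. SQLite’s dialect differs from PostgreSQL or MySQL in places, but these particular queries use widely supported syntax.

```python
import sqlite3

# In-memory SQLite database with two small, made-up tables
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Asha', 'Chennai'), (2, 'Ben', 'Boston'), (3, 'Chen', 'Chennai');
    INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 150.0), (4, 3, 50.0);
""")

# Basic query with conditional filtering
chennai = conn.execute(
    "SELECT name FROM customers WHERE city = 'Chennai' ORDER BY name"
).fetchall()

# Join plus aggregation: total spend per customer
totals = conn.execute("""
    SELECT c.name, SUM(o.amount) AS total
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.name
    ORDER BY total DESC
""").fetchall()

# Subquery: orders larger than the average order amount
big_orders = conn.execute("""
    SELECT id, amount FROM orders
    WHERE amount > (SELECT AVG(amount) FROM orders)
    ORDER BY id
""").fetchall()

# String functions: upper-cased names and their lengths
names = conn.execute(
    "SELECT UPPER(name), LENGTH(name) FROM customers ORDER BY id"
).fetchall()

print(chennai)     # [('Asha',), ('Chen',)]
print(totals)      # [('Asha', 200.0), ('Ben', 150.0), ('Chen', 50.0)]
print(big_orders)  # [(1, 120.0), (3, 150.0)]
print(names)       # [('ASHA', 4), ('BEN', 3), ('CHEN', 4)]
```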

As with Python, SQL takes dedicated practice, and several platforms are available to help you practice it. If you prefer working through a tutorial, check out the SQL tutorial by Mode Analytics.

With your Python foundations in place, you can build on them by learning web scraping. As a data professional, you should be comfortable with data collection: specifically, scraping the web programmatically and parsing JSON responses from APIs.

After familiarizing yourself with basic HTTP methods, you can build on your Python skills by learning:

- HTTP requests with the Requests library

- Web scraping with the BeautifulSoup library (learning Scrapy will also be helpful)

- Parsing JSON responses from APIs with the built-in json module
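
Here’s a minimal sketch of the parsing side that uses only the standard library, so it runs without network access or third-party packages. The JSON string and HTML snippet are made up and stand in for what `requests.get(...)` would return. The `LinkCollector` class is a hypothetical helper built on the standard library’s `html.parser` module, swapped in for BeautifulSoup. The BeautifulSoup equivalent would be something like `BeautifulSoup(page, "html.parser").find_all("a")`.

```python
import json
from html.parser import HTMLParser

# A made-up API response body; with Requests you'd typically get this text
# from response.text, or an already-parsed object from response.json().
raw = '{"results": [{"title": "Laptop", "price": 899.0}, {"title": "Mouse", "price": 19.5}]}'
data = json.loads(raw)  # JSON text -> Python dicts and lists
titles = [item["title"] for item in data["results"]]
total = sum(item["price"] for item in data["results"])


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag (a stdlib stand-in for BeautifulSoup)."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:
                self.links.append(href)


page = '<ul><li><a href="/item/1">Laptop</a></li><li><a href="/item/2">Mouse</a></li></ul>'
parser = LinkCollector()
parser.feed(page)

print(titles)        # ['Laptop', 'Mouse']
print(total)         # 918.5
print(parser.links)  # ['/item/1', '/item/2']
```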

At this point, you can try coding a simple web scraping project. Keep it simple yet relevant to you so you stay interested. Say you want to scrape your shopping data from Amazon so you can analyze it later. This is just an example; pick a project that interests you.

At this point in your data science learning journey, you should be comfortable with both Python and SQL. With these foundational skills, you can now proceed to analyze and visualize data to understand it better:

- For data analysis with Python, you can learn to use the pandas library. If you’re looking for a step-by-step learning guide for pandas, check out 7 Steps to Mastering Data Wrangling with Pandas and Python.

- For data visualization, you can learn to work with the matplotlib and seaborn libraries.
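
As a first taste of pandas, this sketch groups, aggregates, and filters a small DataFrame. The purchase data is made up. It assumes pandas is installed (for example, with `pip install pandas`).

```python
import pandas as pd

# A small, made-up dataset of purchases
df = pd.DataFrame({
    "category": ["books", "electronics", "books", "grocery", "electronics"],
    "amount": [12.5, 250.0, 30.0, 45.0, 99.0],
})

# Summarize spend per category, largest total first
summary = (
    df.groupby("category")["amount"]
      .agg(["count", "sum", "mean"])
      .sort_values("sum", ascending=False)
)
print(summary)

# Filter rows with a boolean mask
big_purchases = df[df["amount"] > 40]
print(big_purchases)
```

If matplotlib is installed, `summary["sum"].plot(kind="bar")` turns the summary into a quick bar chart, which is a natural bridge into visualization.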

This free Data Analysis with Python certification course from freeCodeCamp covers all the essential Python data science libraries that you need to know. You’ll also get to code some simple projects.

Here again, you have the opportunity to build a project: collect data using web scraping, analyze it with pandas, and learn a library like Streamlit to build an interactive dashboard that presents the results of your analysis.

With programming and data analysis, you can build interesting projects. But it’s also helpful to learn machine learning fundamentals.

Even if you don’t have time to learn how the algorithms work in detail, focus on:

- gaining a high-level overview of how each algorithm works, and

- building models using scikit-learn
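
A baseline model in scikit-learn takes only a few lines: split the data, fit an estimator, and score it on held-out rows. This sketch uses synthetic data from `make_classification` in place of a real dataset and logistic regression as an example estimator. It assumes scikit-learn is installed.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic binary-classification data (a stand-in for a real dataset)
X, y = make_classification(n_samples=500, n_features=8, random_state=42)

# Hold out 25% of the rows to evaluate the model on unseen data
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Baseline: logistic regression with default hyperparameters
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
accuracy = model.score(X_test, y_test)  # mean accuracy on the test split
print(f"Baseline test accuracy: {accuracy:.3f}")
```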

This scikit-learn crash course will help you get up to speed on building machine learning models with scikit-learn. Once you learn how to build a baseline model with scikit-learn, you should also focus on the following to help you build better models:

- Data preprocessing

- Feature engineering

- Hyperparameter tuning
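
One common way to combine these three is scikit-learn’s `Pipeline` together with `GridSearchCV`. In the sketch below, feature scaling stands in for preprocessing and `PolynomialFeatures` stands in for feature engineering. Both are illustrative choices; real projects usually need dataset-specific steps such as imputing missing values or encoding categorical columns. Because the preprocessing sits inside the pipeline, it’s refit on each cross-validation fold, which avoids leaking information from the validation folds.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Synthetic data again, standing in for a real dataset
X, y = make_classification(n_samples=500, n_features=6, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

pipe = Pipeline([
    ("scale", StandardScaler()),                       # preprocessing
    ("poly", PolynomialFeatures(include_bias=False)),  # feature engineering
    ("clf", LogisticRegression(max_iter=2000)),        # the model itself
])

# Hyperparameter tuning: 5-fold cross-validated search over every combination
param_grid = {
    "poly__degree": [1, 2],
    "clf__C": [0.1, 1.0, 10.0],
}
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X_train, y_train)

print(search.best_params_)
print(f"Test accuracy of best pipeline: {search.score(X_test, y_test):.3f}")
```

The `step__parameter` naming in `param_grid` is how `GridSearchCV` addresses a parameter of a specific pipeline step.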

Now it’s time to build projects again. You can start with something simple, like a loan default prediction project, and gradually move on to employee attrition prediction, market basket analysis, and more.

In the previous steps, we talked about building projects to reinforce learning. However, many aspiring data professionals focus mostly on learning and overlook building a portfolio of interesting projects, which is where you apply what you’ve learned.

No matter how much you learn (and know), without projects that showcase your proficiency, it’s very hard to convince recruiters of your expertise.

Because even a simple page to showcase projects takes a fair amount of front-end coding, many learners never build a portfolio. You probably already use a GitHub repository, with an informative README file, to track changes to your project’s code. To build out a data science portfolio that showcases your projects, you can also check out free platforms such as Kaggle and DataSciencePortfol.io.

Choose your projects based on the domain you’d like to land a data science role in, such as healthcare, FinTech, or supply chain, so that you can demonstrate both your interest and your proficiency. Alternatively, you can build a few projects to figure out your domain of interest.

Getting found online and showcasing your experience are both helpful in the job search process, especially in the early stages of your career. That is why building a strong online presence is our next step.

To this end, the best path is to build your own personal website with:

- An informative “About” page and contact info

- A blog that features articles and tutorials you write

- A project page with details of projects you’ve worked on

A personal website is ideal, but at the very least you should have a LinkedIn profile and a Twitter (now X) handle while you’re job hunting.

On Twitter, add a relevant headline to your profile and engage meaningfully with the technical and career advice that people share. On LinkedIn, make sure your profile is accurate and as complete as possible:

- Update your headline to reflect your professional expertise

- Fill out the experience and education sections

- In the “Projects” section, add your projects with a short description and a link to each one

- Add your published articles to your profile

Be proactive when networking on these platforms, and share what you’re learning periodically. If you aren’t ready to start your own blog just yet, try writing on social media to build your writing skills.

You can write a LinkedIn post or article about a data science concept you just learned or a project you’re working on. Or tweet about what you’re learning, the mistakes you make along the way, and what you learned from them.

Notice that this step isn’t completely separate from building your project portfolio. In addition to working on your technical skills and building projects (yes, your portfolio), you want to build your online presence so that recruiters can find you and reach out with relevant opportunities when they’re looking for candidates.

To crack data science interviews, you need to prepare for both the coding rounds that test your problem-solving skills and the core technical interviews, where you should be able to showcase your understanding of data science.

I recommend taking at least an introductory course on data structures and algorithms and then solving problems on HackerRank and LeetCode. If you’re short on time, you can work through a problem set such as Blind 75, which covers all the major topics: arrays, dynamic programming, strings, graphs, and more.
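
As a taste of what these problem sets look like, Two Sum (a LeetCode classic included in Blind 75) asks for the indices of two numbers in an array that add up to a target. The sketch below shows the standard hash-map approach. The function name and the choice to return `None` when no pair exists are illustrative; LeetCode’s version returns a list and guarantees exactly one answer.

```python
def two_sum(nums, target):
    """Return indices (i, j), i < j, with nums[i] + nums[j] == target, or None.

    One pass with a dict mapping each value seen so far to its index:
    O(n) time and O(n) extra space, versus O(n^2) for checking every pair.
    """
    seen = {}
    for j, value in enumerate(nums):
        complement = target - value
        if complement in seen:
            return seen[complement], j
        seen[value] = j
    return None


print(two_sum([2, 7, 11, 15], 9))  # (0, 1)
print(two_sum([3, 2, 4], 6))       # (1, 2)
print(two_sum([1, 2], 10))         # None
```

In an interview, walking through the trade-off from brute force to the hash map matters as much as the final code.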

Nearly every data science interview process includes at least one SQL round. You can practice SQL on HackerRank and LeetCode too. In addition, you can solve previously asked interview questions on platforms like StrataScratch and DataLemur.

Once you crack these coding interviews and proceed to the next rounds, you should be able to demonstrate your proficiency in data science. You should know your projects in great detail. When discussing projects you’ve worked on, you should be able to explain:

- The business problem you’ve attempted to solve

- Why you approached it the way you did

- How and why the approach is good

Focus on preparing not only from an algorithms and concepts perspective but also from that of understanding business objectives and solving business problems.

And that’s a wrap. In this guide, we discussed the different steps to landing your first data science role.

We also went over the importance of marketing yourself as a professional and prospective candidate in addition to learning data science concepts. For steps that involved learning data science concepts, we also looked at helpful resources.

Good luck on your data science journey!

Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she’s working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more.