NVIDIA’s Chat with RTX is an AI chatbot that runs offline on your personal files

TL;DR

- NVIDIA has released a new free tool called “Chat with RTX” that lets owners of GeForce RTX 30 series or newer GPUs run a chatbot on their own content, locally on their own PC.

- Users can feed in their own files or point it to YouTube videos and playlists, then ask questions and get answers drawn from these sources.

- Because Chat with RTX runs offline, it is inherently more private than online AI chatbots like ChatGPT and Gemini.

AI has permeated every aspect of our lives. Between ChatGPT and Gemini, practically all of us have access to a smart AI virtual assistant that can scour the internet for us and deliver relevant information within seconds. Where these free AI assistants fall short is working with your own data. Running your own AI chatbot locally isn’t everyone’s cup of tea, and subscribing to higher tiers may not be an option for some. NVIDIA is making it significantly easier for GeForce RTX GPU owners to run their own AI chatbot with the new Chat with RTX tool.

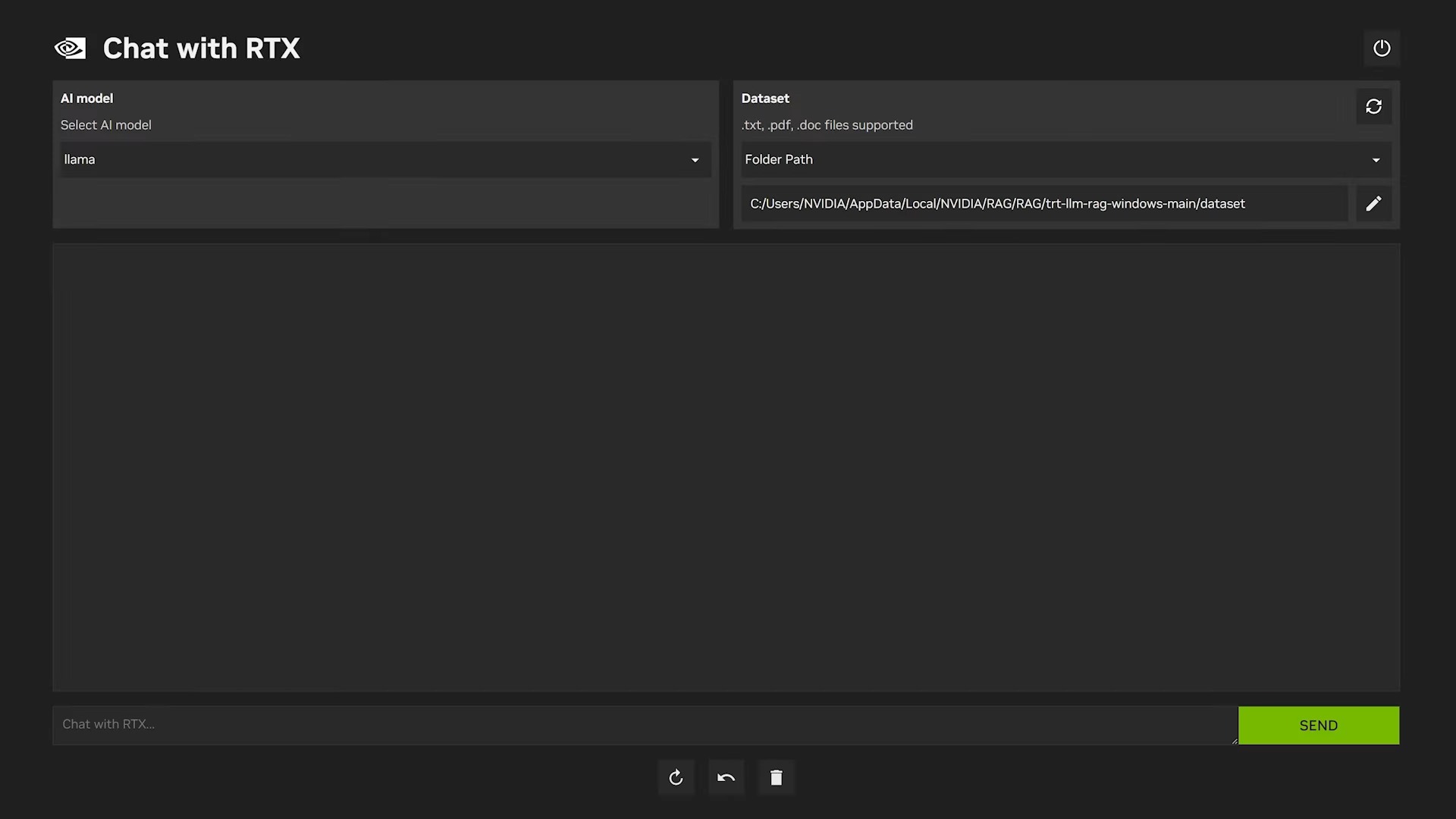

Chat with RTX is a free tool from NVIDIA that lets users personalize a chatbot with their own content. Queries are accelerated by the NVIDIA GPU in your Windows PC, and support is restricted to GeForce RTX 30 series or newer GPUs with at least 8GB of VRAM. You also need Windows 10 or later and the latest NVIDIA GPU drivers. NVIDIA also clarifies that the tool is a tech demo, so don’t expect ChatGPT-level polish right out of the gate.

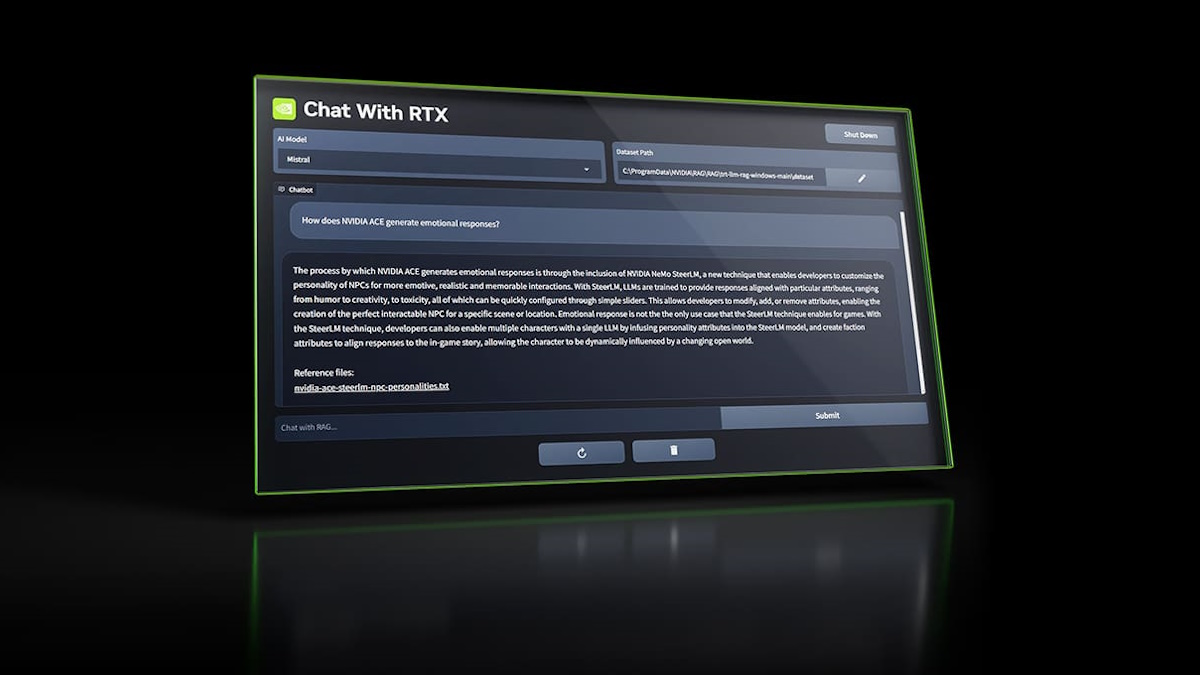

Chat with RTX essentially brings generative AI capabilities to your own PC. You feed in your own files as the dataset, then query them through open-source large language models such as Mistral or Meta’s Llama 2 to get quick, contextually relevant answers.
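To give a rough idea of how a chatbot can answer from your own files, here is a minimal, hypothetical Python sketch of the general retrieval idea: split your documents into chunks, pick the chunks that best match the question, and paste them into the prompt sent to the language model. This is not NVIDIA’s implementation. The function names are made up for illustration, and simple word overlap stands in for the embedding-based similarity search that real tools typically use.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep only runs of letters and digits as words
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, chunk: str) -> int:
    # Count how often the query's distinct words appear in the chunk
    # (a crude stand-in for embedding similarity)
    query_words = set(tokenize(query))
    chunk_counts = Counter(tokenize(chunk))
    return sum(chunk_counts[w] for w in query_words)


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Rank every chunk by its score and keep the top k
    ranked = sorted(chunks, key=lambda c: score(query, c), reverse=True)
    return ranked[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    # Only the retrieved chunks reach the model, not the whole document set
    context = "\n".join(retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "The quarterly report shows revenue grew 12%.",
    "Our office moves to Austin in May.",
    "Revenue targets for next year are ambitious.",
]
print(retrieve("Which city is the office moving to?", docs, k=1))
```

Because only a handful of top-ranked chunks are passed to the model in a setup like this, a question whose answer sits in a few passages fits it well, while a request to summarize entire documents may not.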

You can feed Chat with RTX various file formats, including .txt, .pdf, .doc/.docx, and .xml. You can also feed it information from YouTube videos and playlists. Since Chat with RTX runs locally, your data stays on your device, which makes it well suited to processing sensitive data offline.

NVIDIA does note some limitations. Chat with RTX does not remember context between queries, so you cannot ask follow-up questions. The tool also works better when you are looking for facts spread across a few documents than when you ask it to summarize a set of documents. NVIDIA is content to call this a tech demo, but it’s still a great tool to start with.